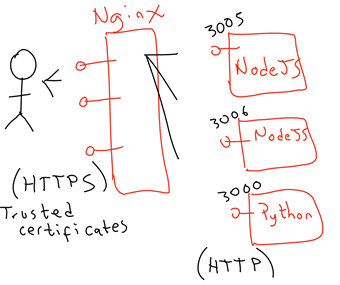

An ongoing trend, as organisations increasingly adopt cloud-native development approaches, is from centralised services, to widely distributed services. This shift requires a rethink about how access management is delivered, in terms of aligning with this model in development practice, as well as accommodating the radical effect that this has on deployment architecture. I have previously alluded to this shift as being from ‘perimeter-based access management’ to ‘centralised access management’, and how this is able to accommodate the increasing adoption of distributed infrastructure and services.

I initially began writing a post which would explore this concept in more detail, though it quickly grew beyond what could be easily captured within a single post. As a result, in order to give the topic justice, I will break this into a number of posts, beginning, in this post, by exploring what Access Management even looks like in this sort of architecture, then going into more detail about strategies for implementing a micro-services approach to access management.

Continue reading “Access Management and Micro-services – Part 1: Overview”